Harnessing Amazon Bedrock AI with Serverless AWS Lambda

This article was published more than a year ago. Please note that the information may be out of date.

Introduction

Hello, I’m Hemanth from the Alliance Department. In this blog, I will guide you through the process of integrating AWS Lambda, a serverless compute service, with Amazon Bedrock AI. We will explore how to use these two services together to create a robust AI-powered solution in a serverless environment.

AWS

Amazon Web Services, or AWS, is a cloud service platform that provides content delivery, database storage, compute capacity, and other capabilities that help businesses grow. AWS offers a broad range of services in many categories, including Compute, Storage, Networking, Database, Management Tools, and Security.

AWS Lambda

AWS Lambda is a serverless compute service that runs code in response to events and automatically manages the underlying compute resources. It runs code on high-availability compute infrastructure and handles all administration of those resources. Examples of events that can trigger a function include HTTP requests via Amazon API Gateway, changes to objects in Amazon S3, and many others.

Amazon Bedrock

Amazon Bedrock is a fully managed service that enables you to build and scale generative AI applications using foundation models from various AI providers. It allows you to use machine learning models such as language models, text-to-image models, and more, offering a simplified path to AI-powered application development.

Demo

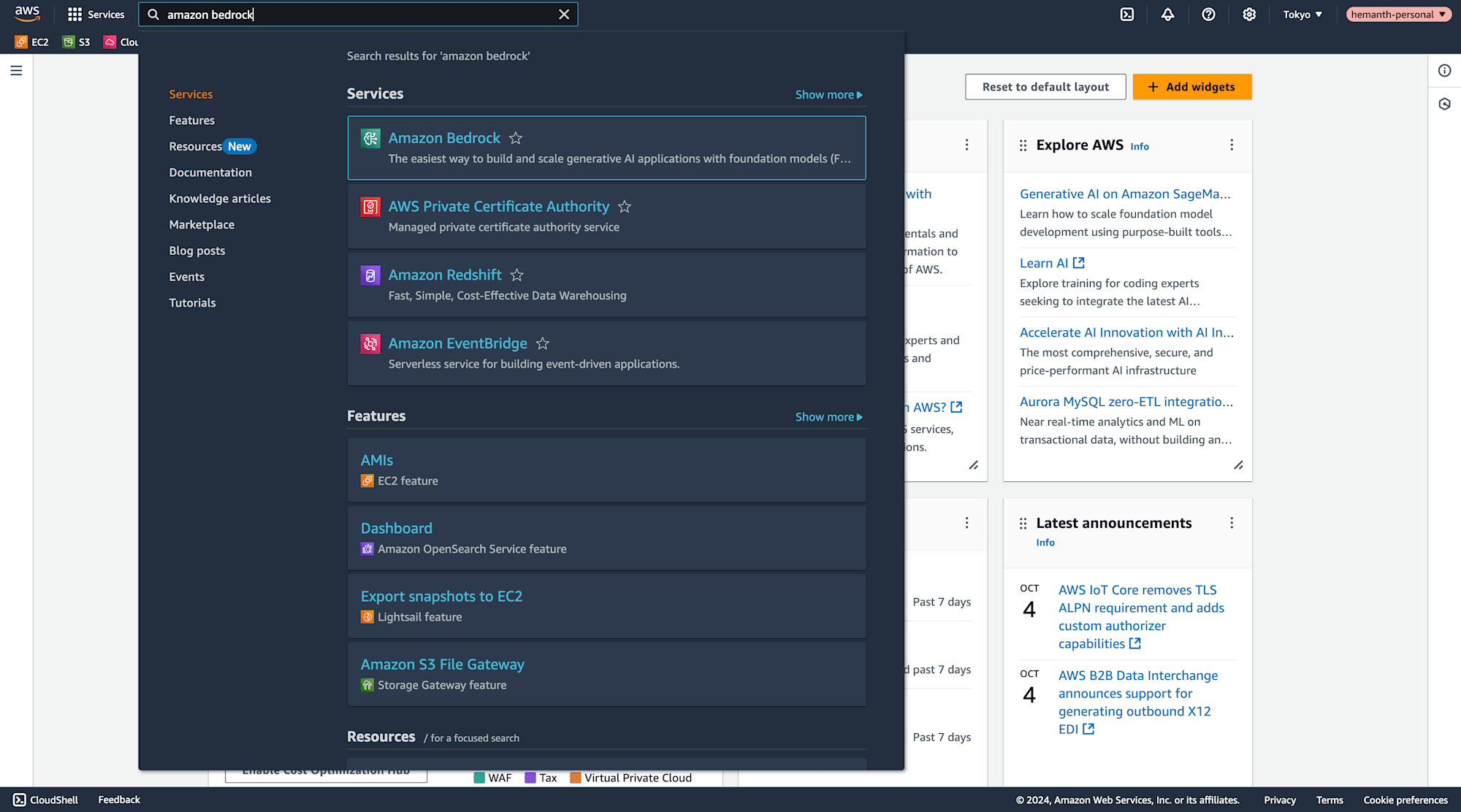

Log in to the AWS Management Console. Search for "Amazon Bedrock" in the search bar and select it.

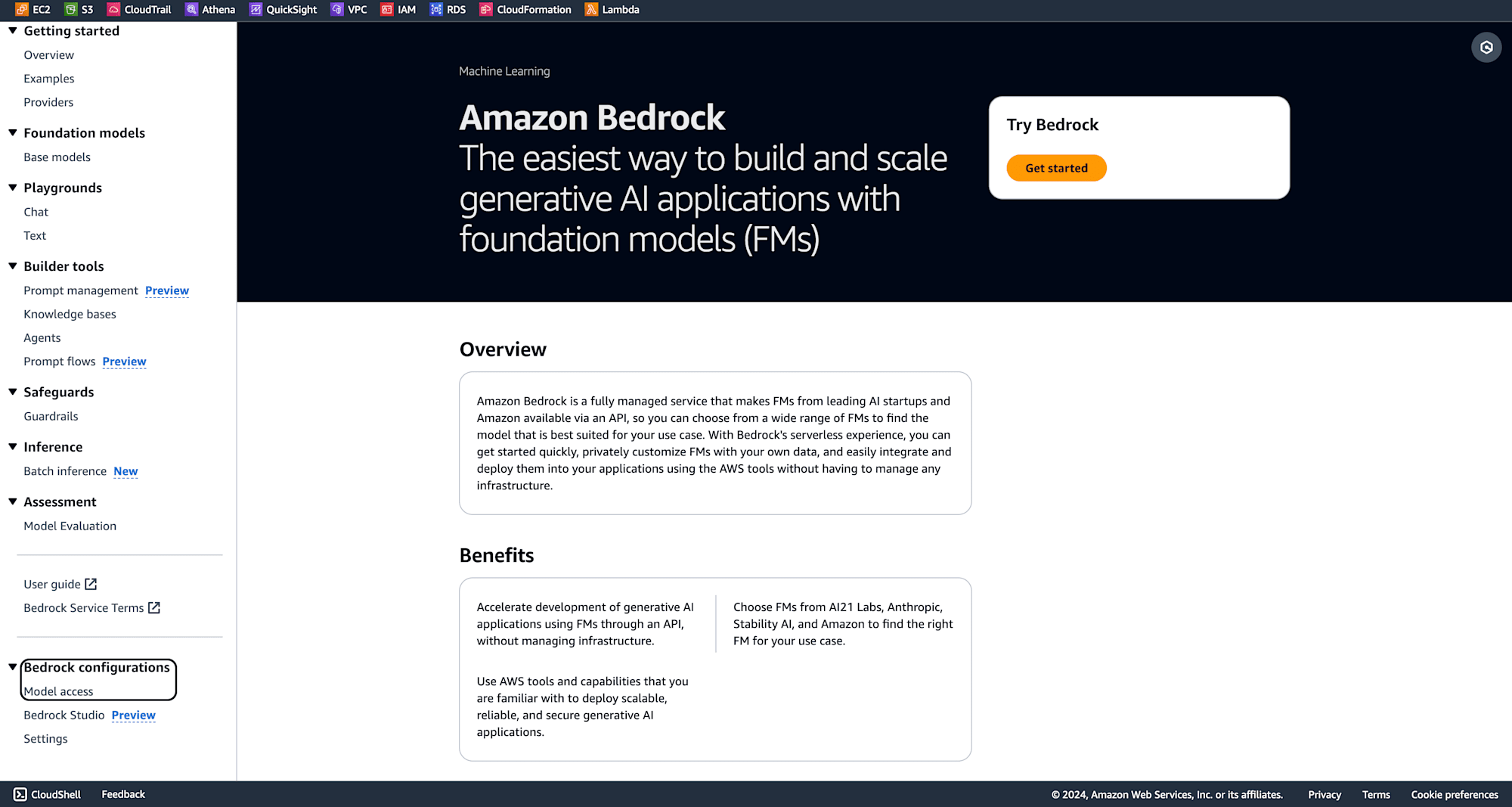

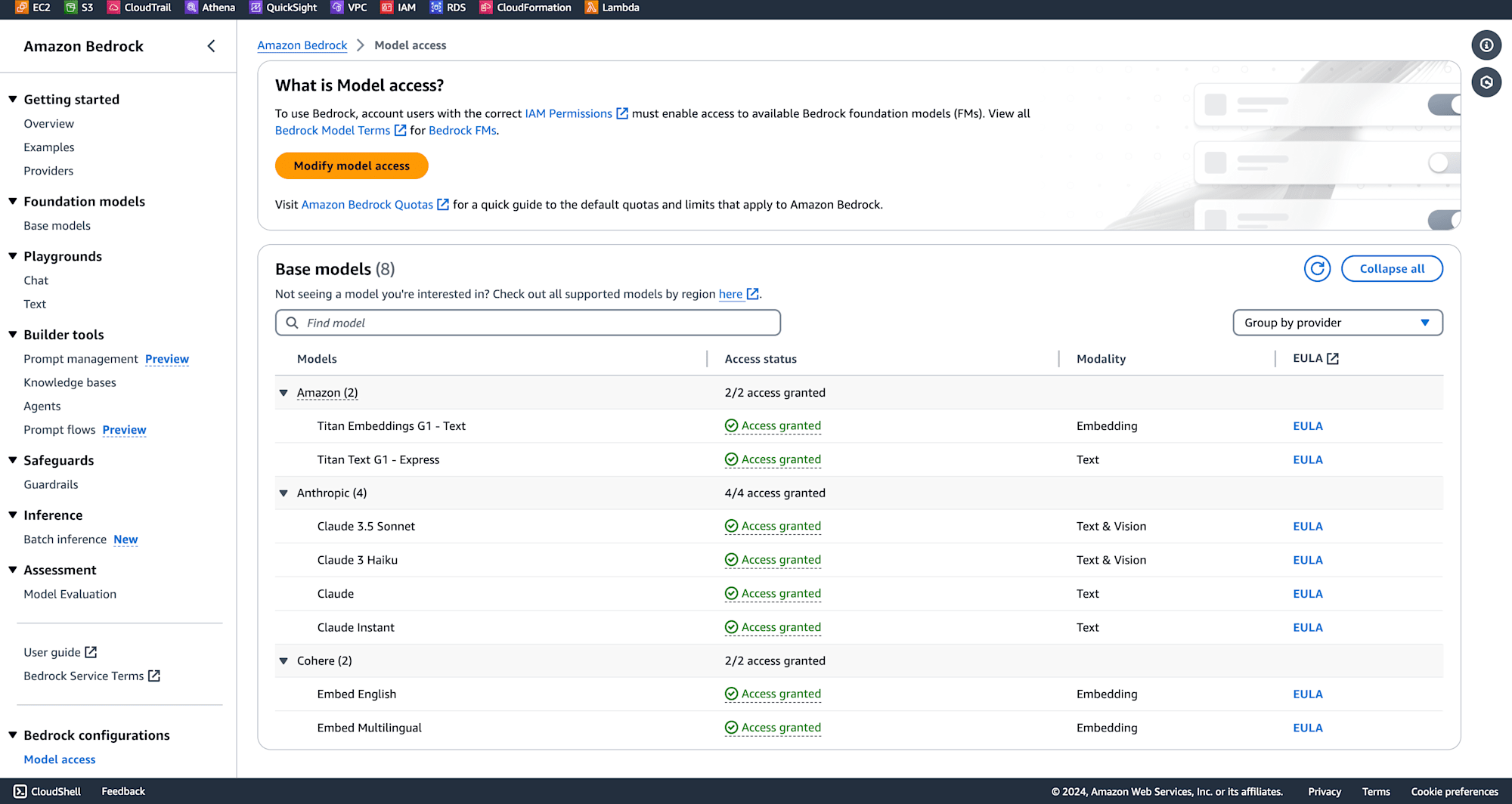

In the Bedrock configurations, navigate to the "Model Access" section.

Here, you can see all the available base models you can work with.
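The same catalog can also be inspected from code. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; the Region `us-east-1` and the helper names are illustrative assumptions, not part of the original walkthrough. It lists the models offered in the Region and does not tell you whether access to a given model has been granted.

```python
def summarize_models(response):
    """Return sorted (provider, model ID) pairs from a ListFoundationModels response."""
    return sorted(
        (model["providerName"], model["modelId"])
        for model in response.get("modelSummaries", [])
    )


def list_text_models(region_name="us-east-1"):  # Region is an assumption
    """Fetch the text-generating foundation models offered in one Region."""
    # Imported lazily so summarize_models works without the AWS SDK installed.
    import boto3

    bedrock = boto3.client("bedrock", region_name=region_name)
    # byOutputModality narrows the listing to models that produce text.
    response = bedrock.list_foundation_models(byOutputModality="TEXT")
    return summarize_models(response)
```

Calling `list_text_models()` returns a list such as provider and model ID pairs that you can print or compare against the console view.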

From the AWS Management Console, search for "Lambda" and click on "Create Function."

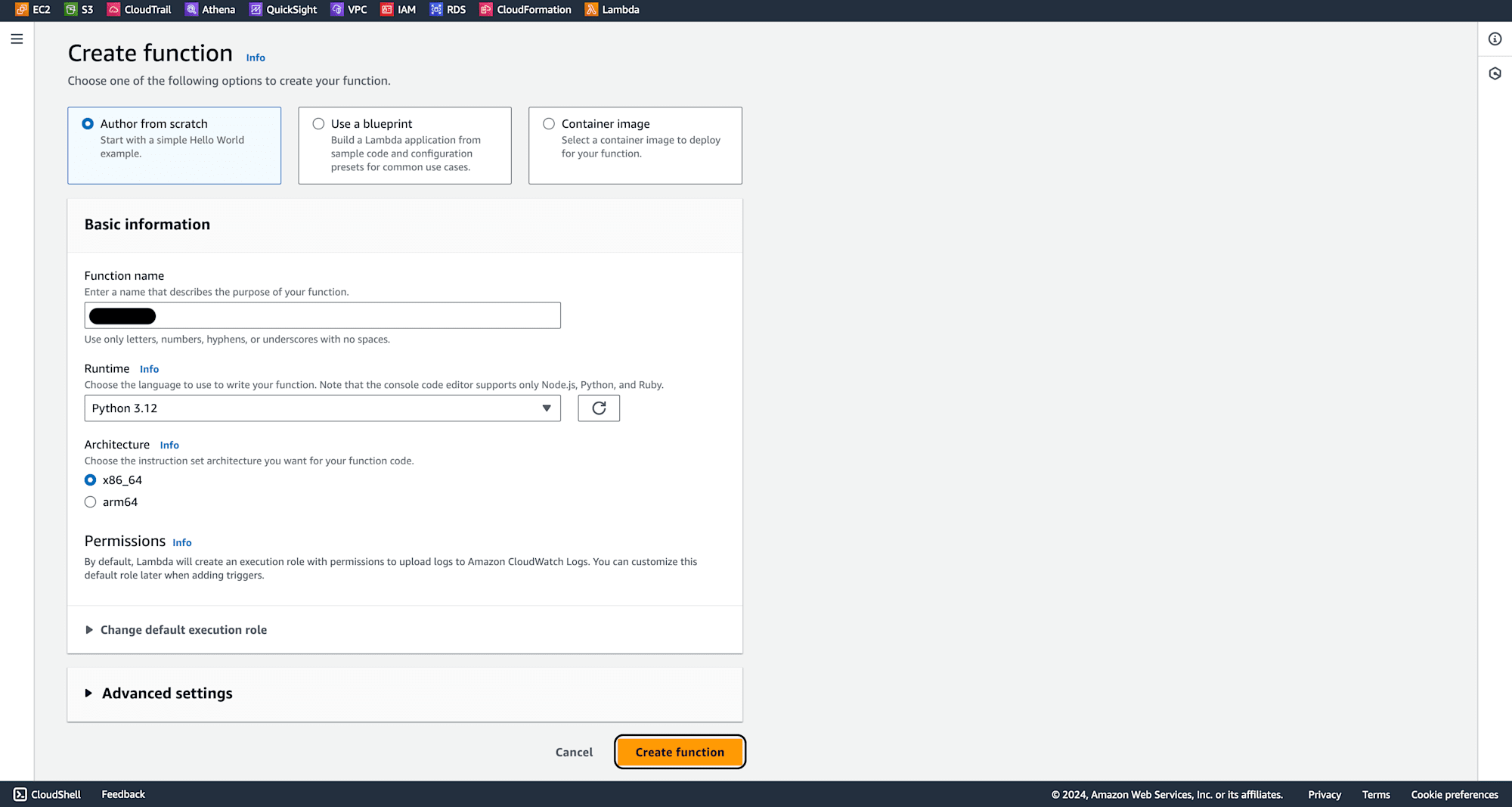

Configure your Lambda function: provide a meaningful name and choose the latest Python version. Keep the other options at their defaults and click "Create Function."

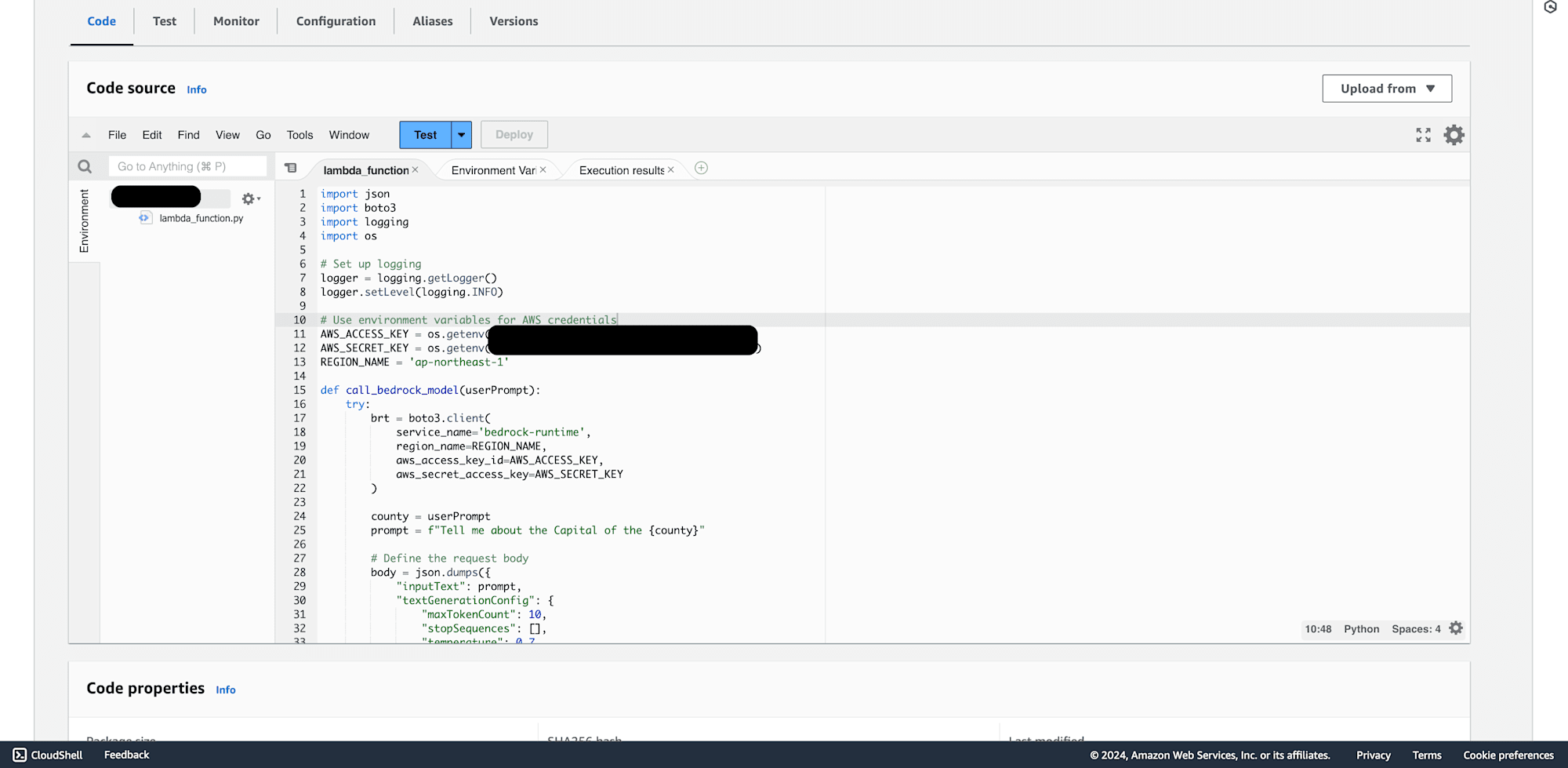

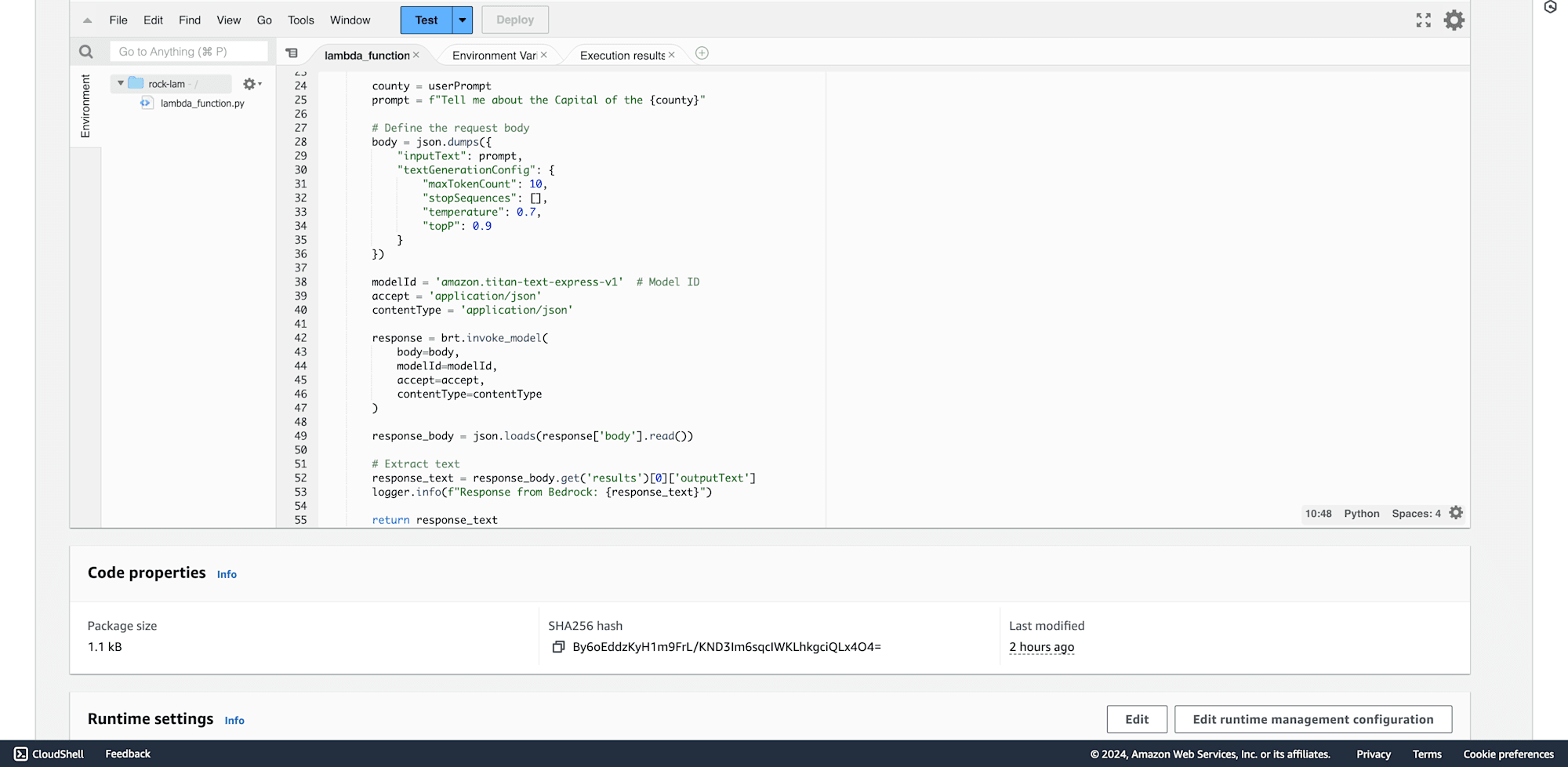

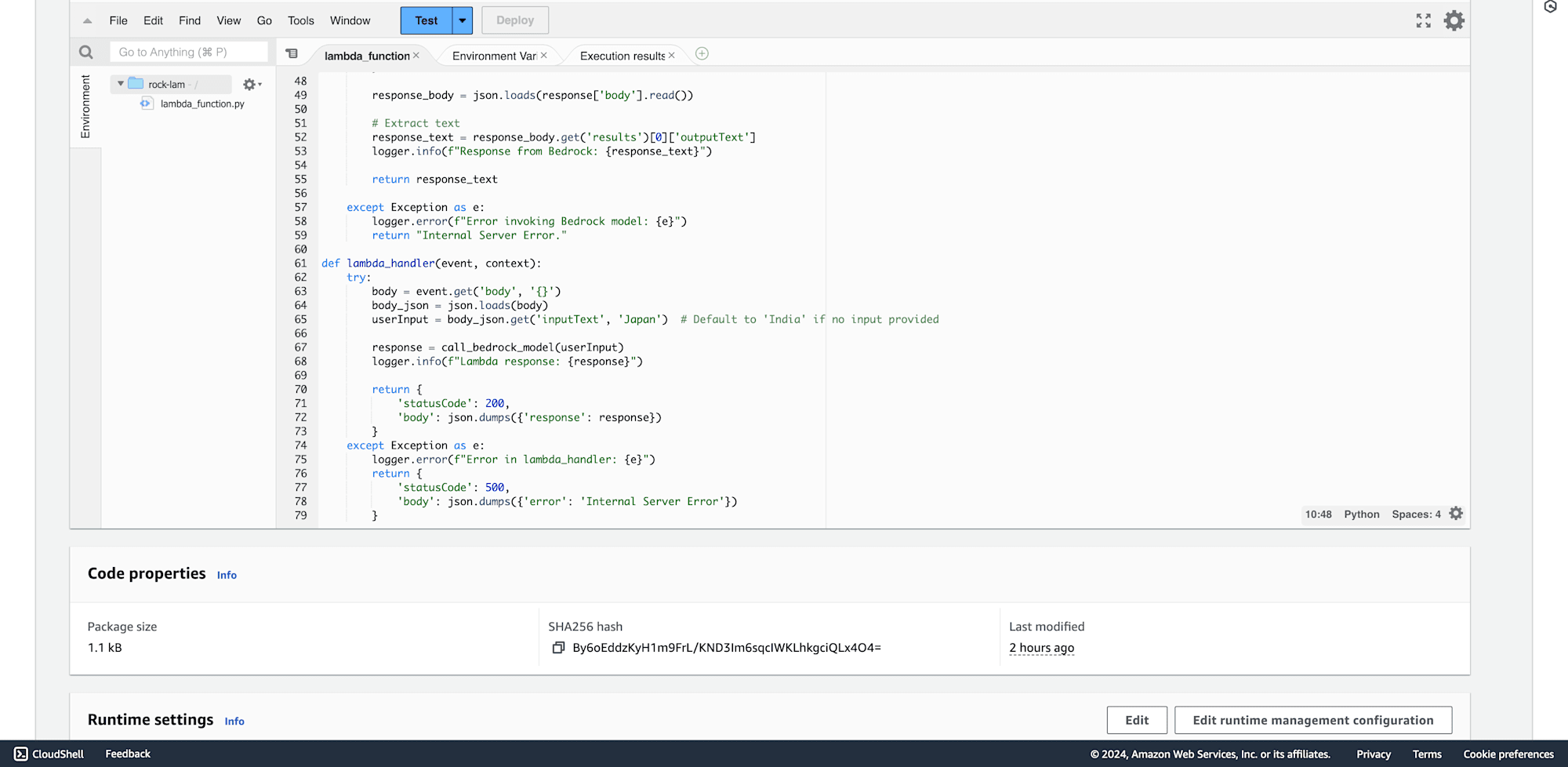

In the Lambda code editor, enter the following Python code. Be sure to add your accessKey, secretKey, and regionName, which allow Lambda to communicate with Amazon Bedrock. Once the code is entered, click "Deploy."

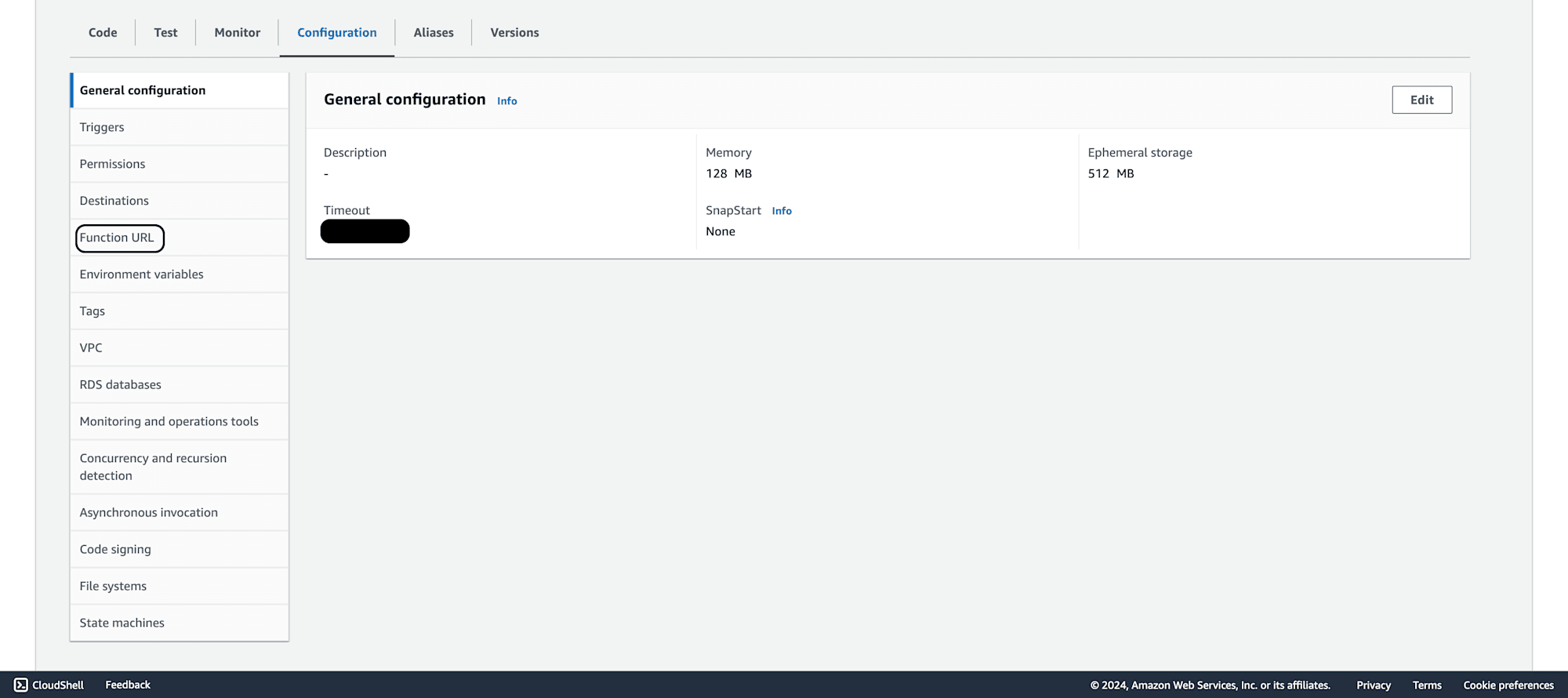

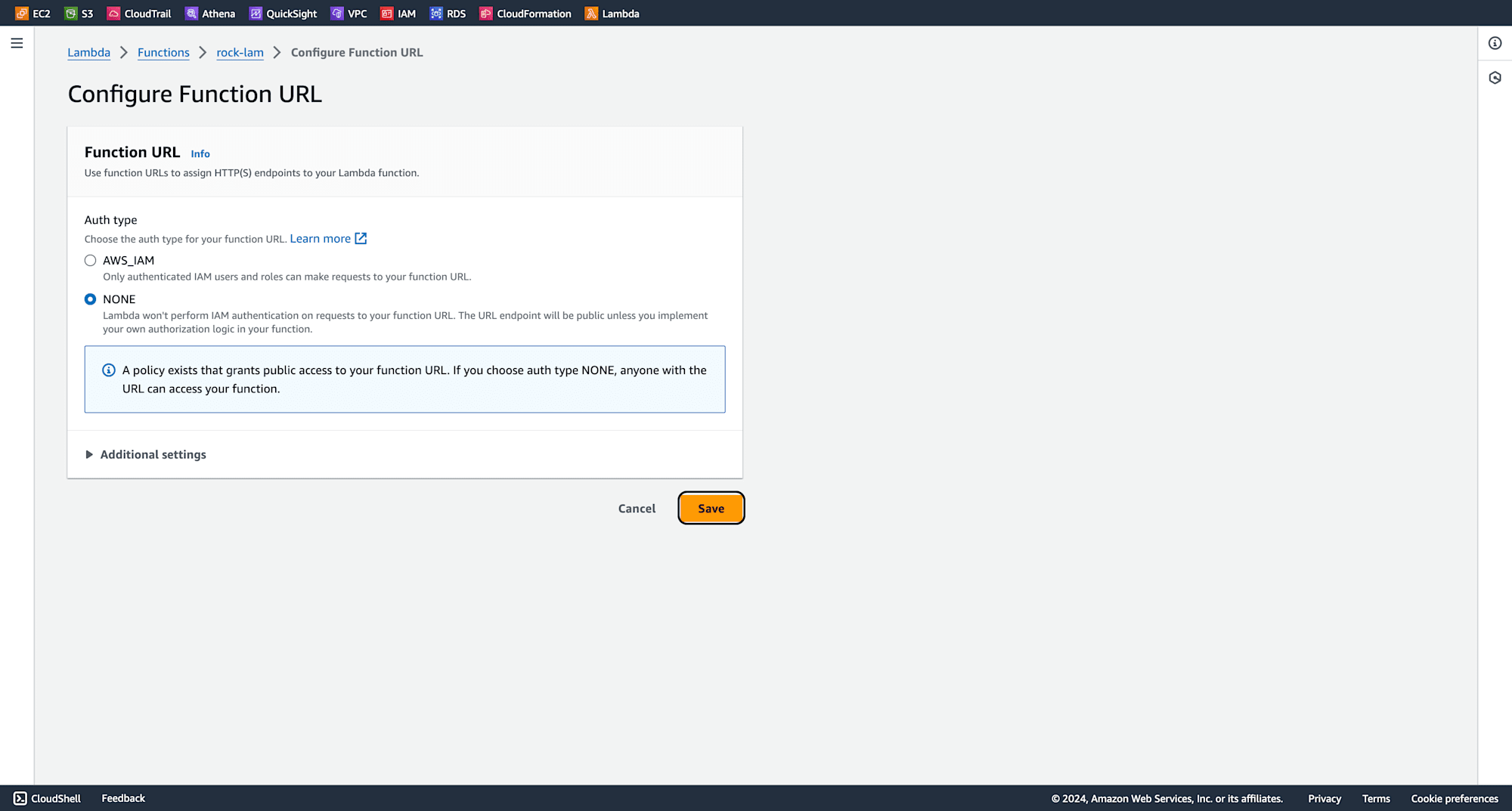
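For readers who want text they can copy, here is a minimal sketch of a handler of this kind. It is not the original code: it assumes the request body is JSON with a hypothetical `prompt` field, uses an example model ID and Region that you should replace, and calls Bedrock's Converse API. It also assumes the function's execution role carries the Bedrock permissions (see the IAM step later in this walkthrough), so boto3 picks up credentials automatically and no accessKey or secretKey appears in the code.

```python
import base64
import json

# Assumed values: replace with a model you have access to and your own Region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"
REGION_NAME = "us-east-1"

_client = None  # cached so warm invocations reuse the same client


def get_client():
    """Create the Bedrock runtime client on first use."""
    global _client
    if _client is None:
        import boto3  # bundled with the Lambda Python runtime

        _client = boto3.client("bedrock-runtime", region_name=REGION_NAME)
    return _client


def extract_prompt(event):
    """Return the "prompt" string from a Function URL event, or None if absent."""
    body = event.get("body") or "{}"
    try:
        if event.get("isBase64Encoded"):
            body = base64.b64decode(body).decode("utf-8")
        payload = json.loads(body)
    except ValueError:  # covers bad base64, bad UTF-8, and bad JSON
        return None
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    return prompt if isinstance(prompt, str) and prompt.strip() else None


def build_response(status_code, payload):
    """Shape a dict the way a Function URL expects an HTTP response."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    prompt = extract_prompt(event)
    if prompt is None:
        return build_response(400, {"error": 'Send JSON with a non-empty "prompt".'})

    # Converse takes the same message format regardless of the model provider.
    result = get_client().converse(
        modelId=MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 512},
    )
    text = result["output"]["message"]["content"][0]["text"]
    return build_response(200, {"completion": text})
```

Validating the body before creating the client means malformed requests are rejected without a Bedrock call.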

Create a Function URL by navigating to the "Configuration" tab.

Select "NONE" as the auth type and click "Save"; your Function URL will then be created. With this setting, anyone who has the URL can invoke the function.

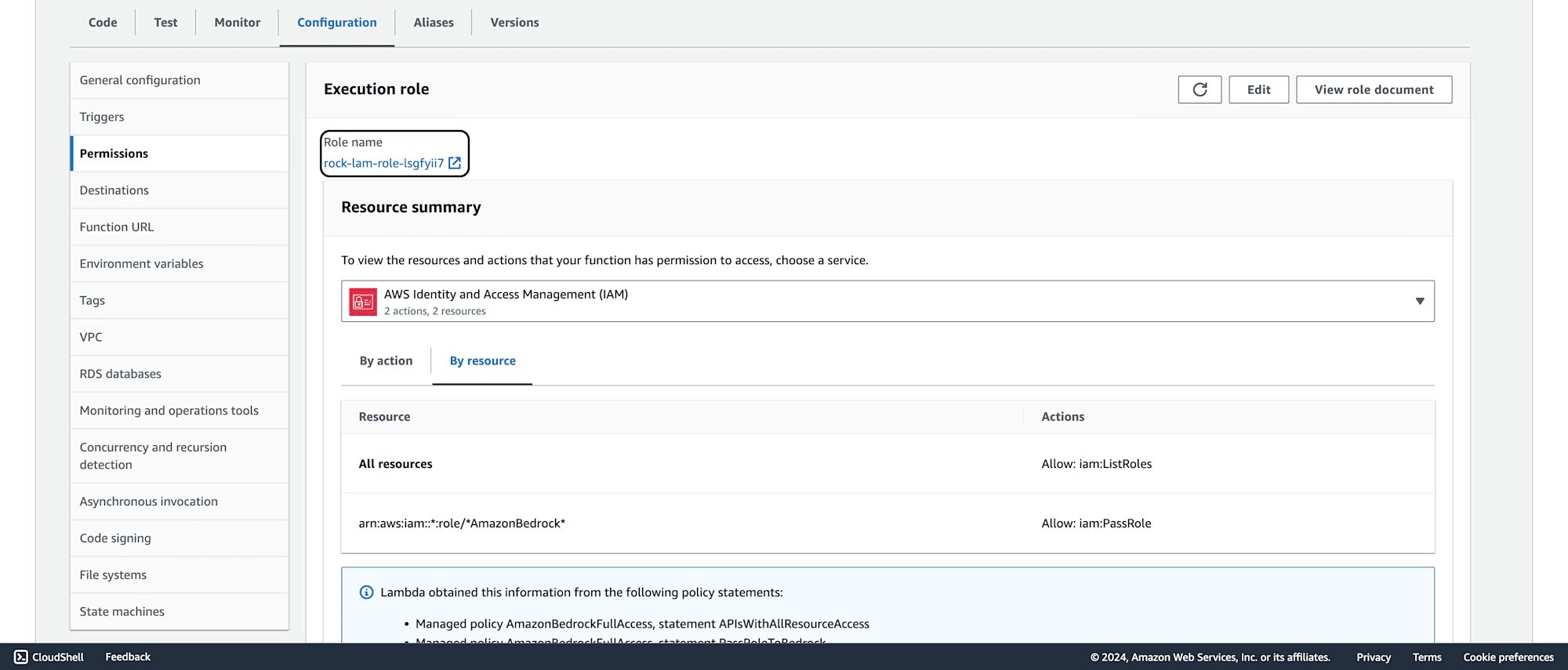

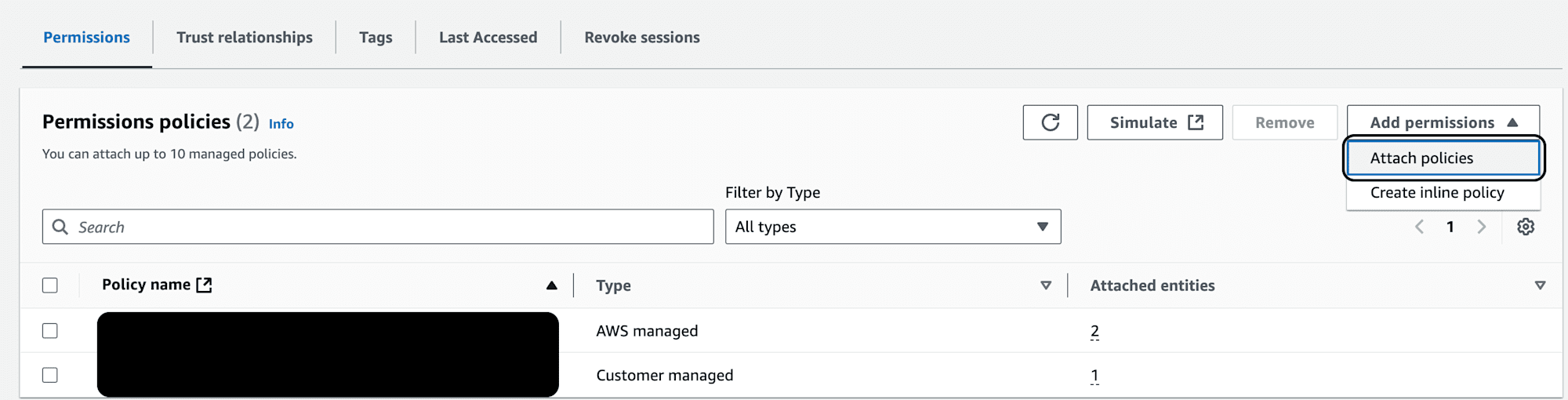

Next, configure the IAM role: locate the Lambda role associated with your function and click on the role name.

Select "Attach Policies" and choose AmazonBedrockFullAccess to give your Lambda function the required permissions to access Bedrock.
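The same attachment can be scripted. This is a minimal sketch, assuming boto3 and IAM permissions to modify the role; the role name is whatever the console generated for your function, and the helper names are hypothetical. The second helper shows a narrower policy document as one possible alternative to full access, which is my suggestion rather than something the original setup uses.

```python
# ARN of the AWS managed policy chosen in the console step above.
BEDROCK_FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonBedrockFullAccess"


def attach_bedrock_policy(role_name, policy_arn=BEDROCK_FULL_ACCESS_ARN):
    """Attach a managed policy to the Lambda execution role."""
    # Imported lazily so the policy helper below works without the AWS SDK.
    import boto3

    iam = boto3.client("iam")
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)


def invoke_only_policy():
    """Build a policy document that allows model invocation and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": "*",
            }
        ],
    }
```

For a demo, the managed policy is the quickest route; a document like the one from `invoke_only_policy()` could be added as an inline policy when the function only needs to call a model.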

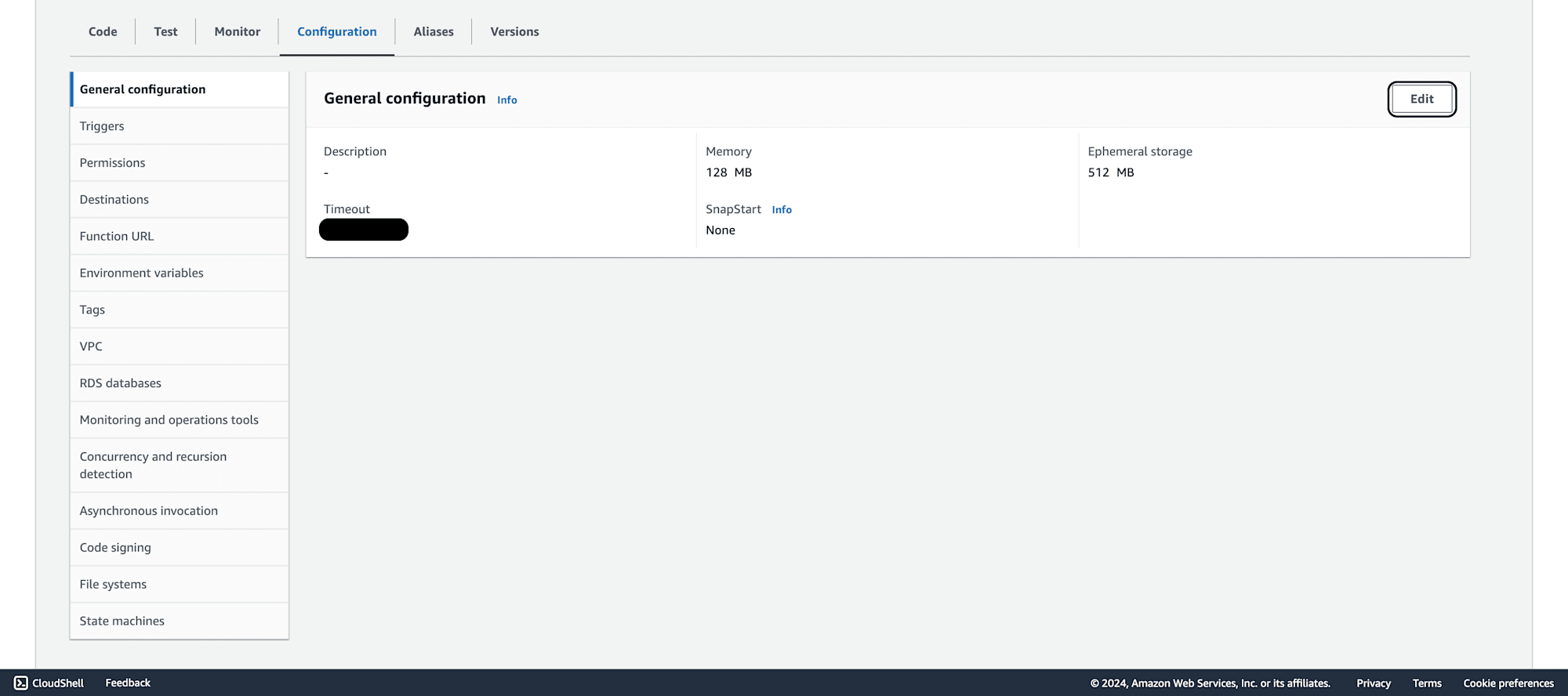

Now go back to the Lambda function and open "General configuration." Click "Edit" and change the timeout to 15 minutes.
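The timeout can also be set through the API, where it is expressed in seconds. The sketch below assumes boto3 and a hypothetical function name and Region; 15 minutes corresponds to 900 seconds, which is the maximum Lambda allows.

```python
MAX_LAMBDA_TIMEOUT_SECONDS = 900  # Lambda's upper limit (15 minutes)


def timeout_seconds(minutes):
    """Convert minutes to whole seconds and check the value Lambda will accept."""
    seconds = int(minutes * 60)
    if not 1 <= seconds <= MAX_LAMBDA_TIMEOUT_SECONDS:
        raise ValueError(
            f"Timeout must be between 1 and {MAX_LAMBDA_TIMEOUT_SECONDS} seconds"
        )
    return seconds


def set_timeout(function_name, minutes=15, region_name="us-east-1"):
    """Update the function's timeout; names and Region are assumptions."""
    # Imported lazily so timeout_seconds works without the AWS SDK installed.
    import boto3

    client = boto3.client("lambda", region_name=region_name)
    client.update_function_configuration(
        FunctionName=function_name,
        Timeout=timeout_seconds(minutes),
    )
```

A long timeout is convenient while experimenting with slow model responses; a shorter value is usually enough once typical latency is known.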

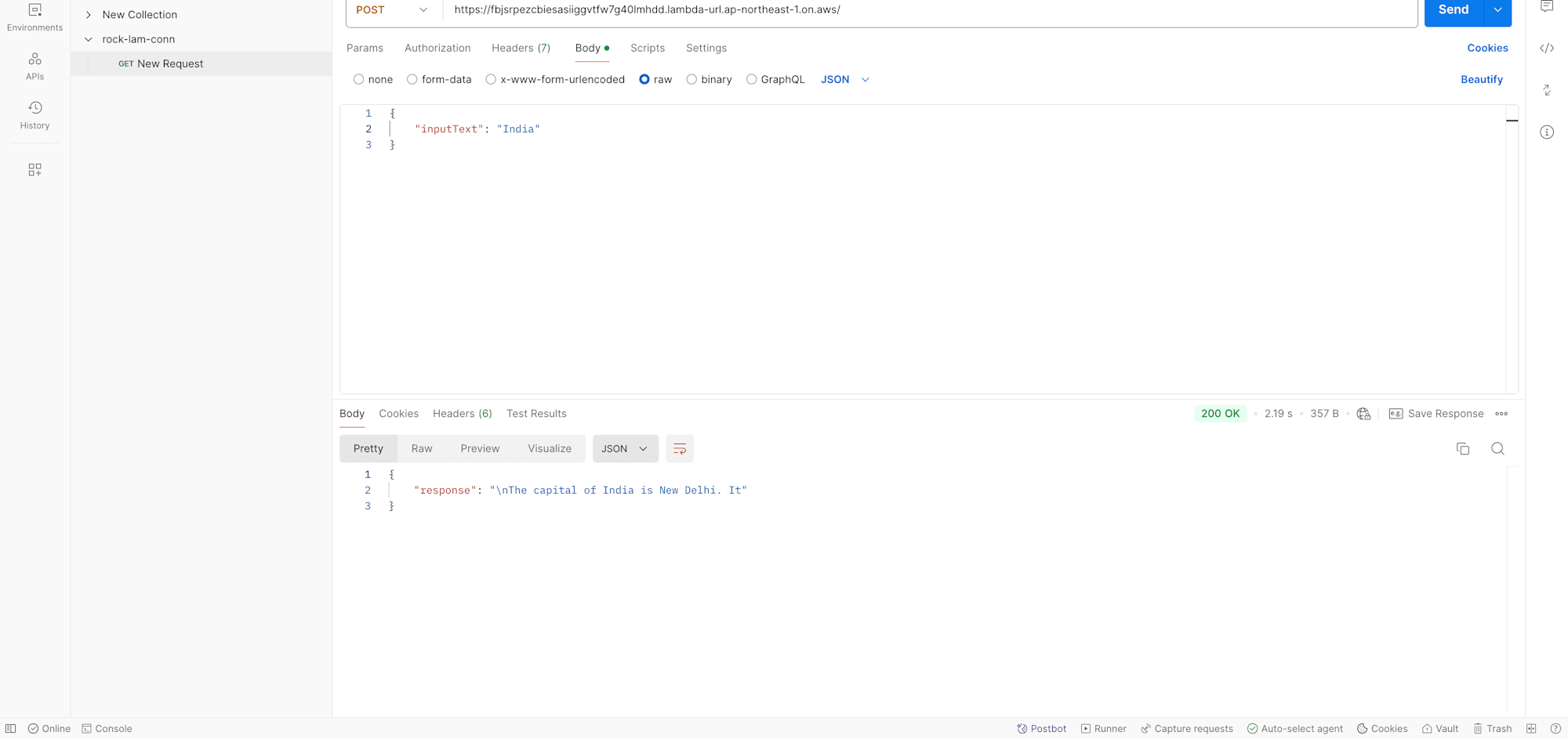

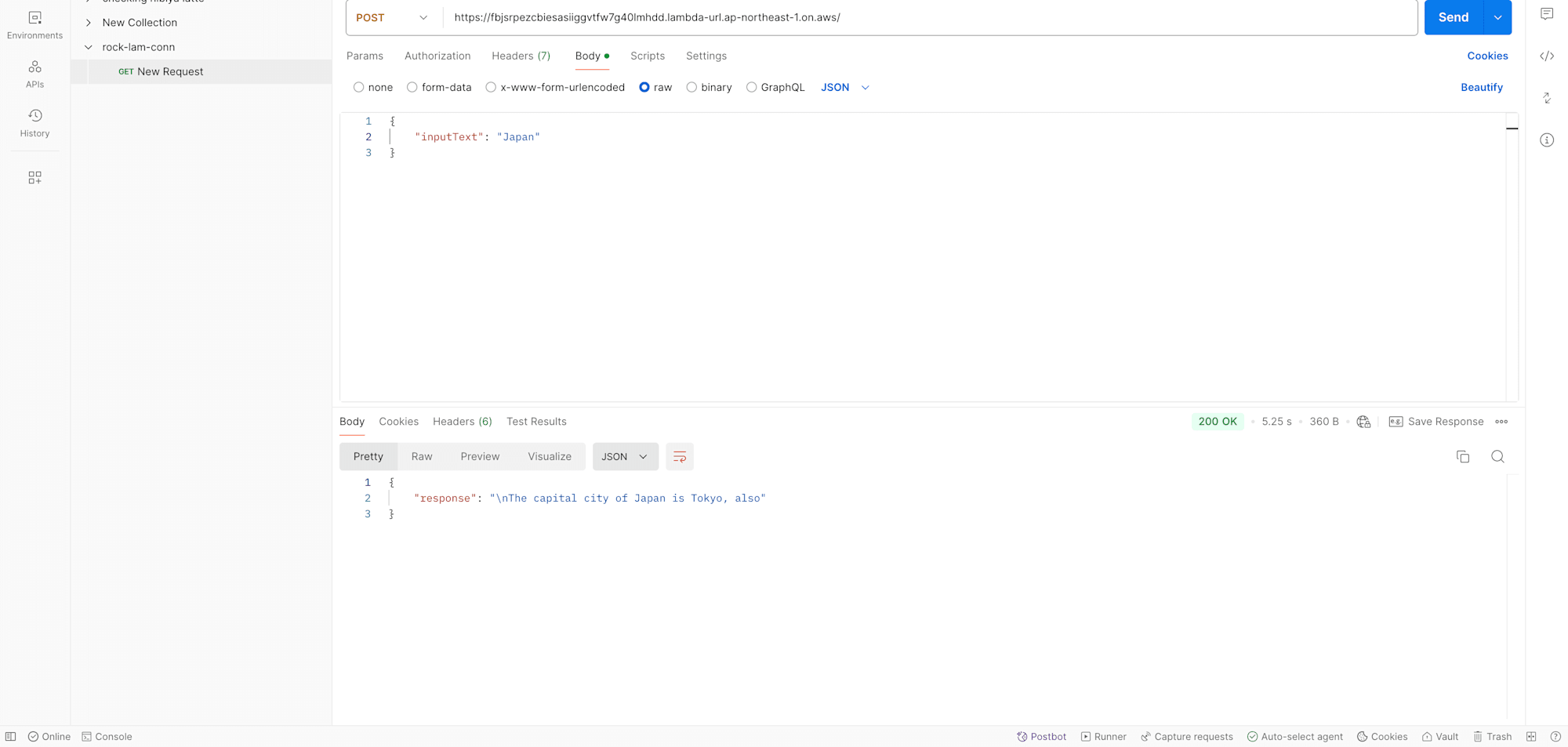

Open Postman, choose the POST method, and paste in the Function URL. In the body, choose "raw" and select the JSON format. Enter the following payload to test the Bedrock model interaction, then hit "Send" to invoke the Lambda function, which will in turn communicate with Amazon Bedrock and return the AI-generated result.
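If you prefer a script to Postman, the same POST request can be sent with Python's standard library. This is a minimal sketch that assumes the function reads a JSON body with a hypothetical `prompt` field and replies with JSON; adjust the payload to whatever your handler expects. The URL in the usage note is a placeholder.

```python
import json
import urllib.request


def build_request(function_url, prompt):
    """Build the POST request that Postman would send with a raw JSON body."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        function_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(function_url, prompt, timeout=60):
    """Send the request and decode the JSON reply from the function."""
    request = build_request(function_url, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `invoke("https://<your-function-url>/", "Write a haiku about serverless")` would return the parsed response body.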

Conclusion

In this blog, we successfully set up a serverless Lambda function to interact with Amazon Bedrock AI, demonstrating how easy it is to create scalable, AI-driven applications. By using AWS Lambda’s event-driven compute service and Amazon Bedrock’s generative AI models, developers can build intelligent solutions without managing servers or infrastructure. This seamless integration empowers businesses to innovate faster and leverage the power of AI in their applications.